https://www.christian-rossow.de

If you can't explain it simply, you don't understand it well enough.

Report - Paper 'Prudent Practices for Designing Malware Experiments: Status Quo and Outlook' accepted at IEEE S&P 2012

In this paper we explore issues relating to prudent experimental evaluation for projects that use malware-execution datasets. Our interest in the topic arose while analyzing malware and researching detection approaches ourselves, during which we discovered that well-working lab experiments could perform much worse in real-world evaluations. Investigating these difficulties led us to identify and explore the pitfalls that caused them. For example, we observed that even a slight artifact in a malware dataset can inadvertently lead to unforeseen performance degradation in practice.

We summarized our paper as follows:

Malware researchers rely on the observation of malicious code in execution to collect datasets for a wide array of experiments, including generation of detection models, study of longitudinal behavior, and validation of prior research. For such research to reflect prudent science, the work needs to address a number of concerns relating to the correct and representative use of the datasets, presentation of methodology in a fashion sufficiently transparent to enable reproducibility, and due consideration of the need not to harm others.

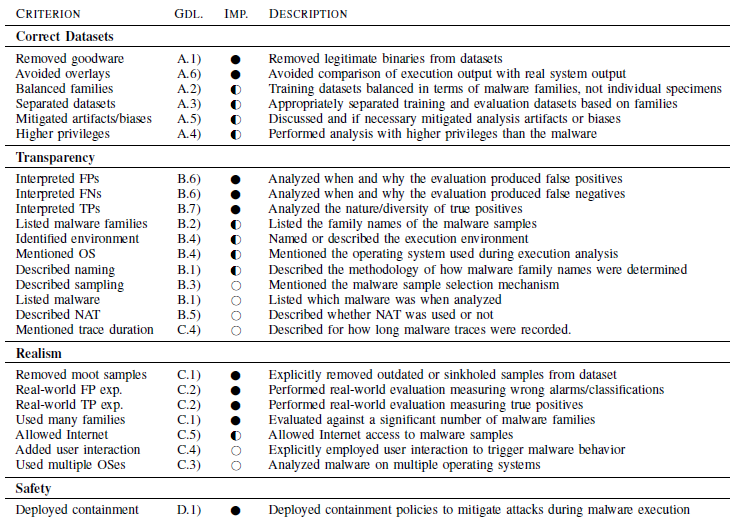

In this paper we study the methodological rigor and prudence in 36 academic publications from 2006 to 2011 that rely on malware execution. 40% of these papers appeared in the 6 highest-ranked academic security conferences. We find frequent shortcomings, including problematic assumptions regarding the use of execution-driven datasets (25% of the papers), absence of description of security precautions taken during experiments (71% of the articles), and oftentimes insufficient description of the experimental setup. Deficiencies occur in top-tier venues and elsewhere alike, highlighting a need for the community to improve its handling of malware datasets. In the hope of aiding authors, reviewers, and readers, we frame guidelines regarding transparency, realism, correctness, and safety for collecting and using malware datasets.

I will present our work at IEEE S&P in San Francisco on Monday, 21.05.2012 (05/21/2012).

We will soon launch a website that will allow the community to discuss aspects of prudent malware experimentation. In particular, this website will ask experts like you for feedback on how we, as a community, can further strive towards prudent malware experiments. More here soon!

Yours

Christian
